I'm currently analysing data on what will probably be the final paper from the project I joined the lab to work on four years ago. The aim of this project was to look at the effect of a specific nerve lesion on behavior and function at multiple levels. We've looked at performance (how well the function is achieved), kinematics (how the structures which perform the function move), and neuromuscular physiology (when the muscles which perform the function are active). For this last paper I'm attempting to synthesize these different strands of data to understand something about mechanisms, and whether they could potentially be targets for intervention.

On my end, the synthesis has taken months of lining up different data sets, verifying that they are properly synchronized, and figuring out discrepancies. It's been painstaking. Most days I've had between two and four spreadsheets open, generally half of which are metadata telling me how two different data sources line up. As I was reaching the end of this process, finally getting close to the dataset I needed for my analysis, it struck me that this was data we'd gathered two years ago. I'd been working on it for over a year. Furthermore, the datasets I was working with weren't raw data; they were themselves measurements of muscle activity and performance that former techs and summer students had also spent months to years working on. Cumulatively, the person-hours spent on the dataset I was assembling were staggering.

There is a paper in review from our lab right now whose entire results section is summarized in one very simple, very elegant graph: six means with error bars. Those six means with error bars represent two months of caring for over twenty baby animals. Hours of understanding what our measurements meant. Hours of students and techs poring over videos and chart recordings. Cross-checks and visualizations and arguments and sick animals and broken equipment, all distilled down to one figure, six points.

My dissertation papers are the result of four years of work. I know this because I'm the only person who worked on them, and they took me four years. But if that is true, then this paper, which has in some ways also taken me four years, yet builds on the work of close to half a dozen people, is the result of two or three times that many years. And it will be maybe eight pages long, at most.

Academic papers, with their brevity and elisions, their straightforward narratives, are poor monuments to the sheer time-consuming, physical work that goes into their production. The only clue in an academic paper to how many hours have been spent on it lies in the length of the author list. Those unsung middle authors are the only monument we allow to the effort that goes into our sleek, polished productions. And maybe that is not good enough.

Wednesday, 25 October 2017

What goes into a paper

Labels: papers, philosophy of science, postdoc, productivity, random musings, science, Writing

Sunday, 31 July 2016

A messy machine

This week in journal club, we revisited a classic paper of evolutionary biology, Stephen J. Gould and Richard C. Lewontin's famous/infamous The Spandrels of San Marco and the Panglossian Paradigm: A Critique of the Adaptationist Programme, a paper that, among other things, has made amateur architectural history something of a cottage industry among evolutionary biologists. "Spandrels", as it is often known, is almost impossible to discuss as a scientific paper among biologists of a certain age.* It is a cultural artefact, a signifier of its times. Any discussion of "Spandrels" immediately becomes a historiography, an attempt to, on the one hand, understand it in a context that no longer surrounds us, and, on the other, detect its influence on the different world in which we now live. "Spandrels" is, depending on who you talk to, either the hallmark of a small but significant paradigm shift in evolutionary biology, or the beginning of an unhelpful tangent that has needlessly distracted evolutionary biologists for decades.

But taken on its own terms, "Spandrels" is a bizarre thing. Certainly, it is the kind of paper we are unused to seeing today. It is a straight-up, unapologetic, highly (and variably effectively) rhetorical polemic. As its name implies, it is a critique not of data, but of ways of thinking. "Spandrels" is all argument, no new data.**

What I think is most interesting about "Spandrels" in that regard is the insight it gives us into the messy machine that is science. Specifically, it challenges simplistic notions about what science is.

In her speech accepting the Democratic Party's presidential nomination, Hillary Clinton said at one point "I believe in Science". The reaction among scientists (on social media, because that's how I gauge reactions among scientists) was divided into two camps. One we shall call camp relief (as in "Thank you, thank you, an existential dread has been slightly lifted from my shoulders"). The other we shall call camp epistemological frustration, best exemplified by this Facebook response, specifically point 2: "Rather, science is a philosophical approach to understanding one's world - one which is rooted in doubt, skepticism and formal testing methods". To which I would add only one thing:

"science, among other things, is a philosophical approach to understanding one's world - one which is rooted in doubt, skepticism and formal testing methods"

Because science is also a social activity, undertaken by people (Stephen J. Gould and Richard Lewontin) supported by institutions (Harvard University, the Royal Society). And these are very much things we can believe in (in the sense of having faith in them). Science is values, such as assumptions of good faith (sorely tested of late) and "nullius in verba". Science is also norms of ethics and even of esthetics. Some will argue that these things are ancillary to science's epistemological foundation, which I think is nonsense. Unless you want to subscribe to some Walden-esque, neo-Rousseauian vision where science is limited to ascertaining the depth of ponds we can measure on our own, the social and institutional structures of science, and the Trust which underpins them, are as crucial to science as any theory of knowledge. For without them, no aggregate, collaborative, progressive, growing science is possible.

Science is a messy machine, and like all messy machines we are tempted to make it seem simple. But when we say "science is self-correcting", it is like when we say "markets find solutions", or "the eventual overthrow of the bourgeoisie is an inevitable consequence of capitalism". It makes complex, nonlinear, messy human processes seem cleaner than they are. And in the process, it obscures all the awful things that humans do when they feel their actions are not being judged.

Because the attitude that limits science to an abstracted epistemological process, the attitude that obscures the social structures that allow science to work, even when it is held for the best of reasons, is a cousin of the attitude that tells people of color, and women, and disabled folk, and gay folk, and people from developing countries that the barriers they face are not part of science.

Only an inclusive definition of science, one that counts as part of science the epistemology as well as the institutions and, ultimately, the people DOING the science, can argue that the structural inequalities of science are a scientific problem. And to solve those structural inequalities, we must believe they are a problem, and we must believe we can fix them.

*I've noticed with some amusement, being at the tail end of that generation, that younger biologists are completely nonplussed by the paper's tone and cultural importance.

** In that respect, it is very similar (and, knowing Gould, I suspect this is no coincidence) to Simpson's slim magnum opus, Tempo and Mode in Evolution.

Sunday, 20 December 2015

The walls are in our heads

We had a seminar the other day on optogenetics, given by one of the junior faculty on the neuro side of our department. Those of us on the biomechanics side of things are increasingly interested in the sensorimotor processing necessary to regulate the complex musculoskeletal behaviors we observe. Like good physiologists, we want to be able to disrupt the systems to see how they respond. And the prospect of being able to alter sensory and motor signals reversibly and quickly is particularly intriguing. So we had a chat about it.

Talking with the neuro people is a stark reminder of disciplinary boundaries. Our basic questions overlap on the matter of animal behavior, yet diverge in where we focus our explanatory efforts. To caricature, we examine behavior and musculoskeletal systems and treat the brain as a black box, and the neuroscientists examine behavior and the brain and treat the musculoskeletal system as a black box. So they are often unclear on things we take for granted, and vice versa.

As we were discussing the background of optogenetics, the names of Chlamydomonas and Volvox, the green algae from whose genomes the photosensitive ion channel genes were extracted, came up. Because of my dilettantish path through biology, I have a fair amount of botany, and a lot of taxonomy, in my knapsack. And that same wandering path included a pretty extensive flirtation with both cellular physiology and neuroscience as an undergrad. As we discussed the various components of optogenetics (the light-gated ion channels, the promoter sequences, the viral delivery vector), different questions popped into my head. Why did the algae have light-gated ion channels (I'm assuming some sort of phototactic behavior)? Were transposon sequences used to insert the genes into the neurons' genomes, so that they would be replicated along with the chromosomes, or were they left as free-floating strands of DNA? Of course, in our group of mammalian biomechanists and neuroscientists, no one really knew the answers to those questions. And to be honest, they probably couldn't have pulled Volvox out of a eukaryote lineup.

I often quip that huge amounts of knowledge about Mus musculus are held by people who don't give a damn about mice. Likewise, many of the people who know about Xenopus development probably know very little about frogs. Model organisms, translational focus and systems-based thinking lead to extensive study of organisms that is oddly divorced from an understanding of the organism qua organism. Evolutionary biologists do this too. Systematists famously know little about the biological uses of the various structures they use to construct cladograms. In fact, there was a time when such knowledge was considered harmful to establishing relationships, and systematists proudly touted their lack of knowledge about organism function. And molecular biologists have often been not much better regarding the organisms whose genomes they sequence.

And yet, here, with optogenetics, we have a technology that is born of in-depth knowledge of the physiology of single-celled algae, the reproductive chemistry of viruses, and the control of gene expression at the cellular level in mammalian neurons. None of these things are trivial. All of them are products of long research programs within subfields of biology. (The discovery and understanding of transcription factors alone was a huge revolution in cellular genetics, and the history of our understanding of viruses and single-celled algae is equally fascinating.)

It is true that disciplines are necessary to provide depth of understanding. It is also true, as Michael Hendricks recently pointed out, that interdisciplinary research assumes the existence of robust, vibrant, INTERESTING disciplines. But for this interdisciplinaryness to occur, there must be people with enough curiosity about what is going on in the neighboring silo to, well, see a possibility for coupling viral vector technologies with light-gated channels from algae. And when the results of interdisciplinary research become ubiquitous within a field, there are real risks in remaining ignorant about those aspects of your technique that come from a different scientific history and background.

Without a minimum of curiosity about what's going on in the silo next door, interdisciplinary breakthroughs are impossible. And without a minimum of curiosity about interdisciplinary breakthroughs, our understanding of the things we do in our own fields is more of a black box than we might like.

Tuesday, 18 August 2015

Why I read the introduction and discussion of papers

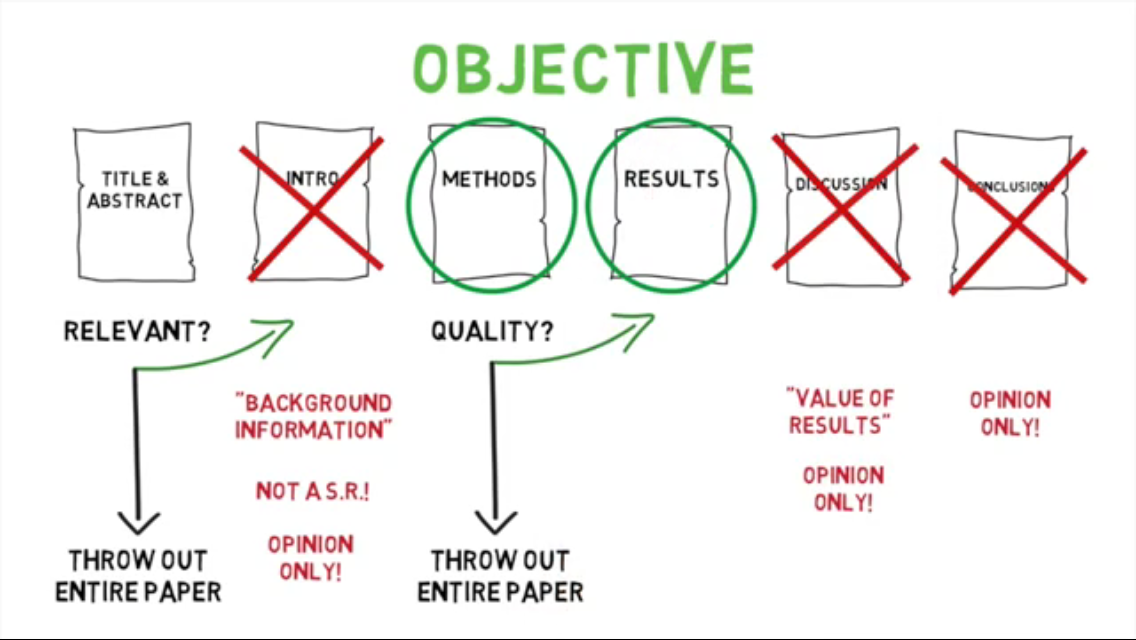

Over the past year, I've heard several times the sentiment that introductions and discussions of papers are not worth the .pdf memory they take up. Most recently, it took the form of the following cartoon passed around twitter.

|

| Cartoon by Anthony Crocco |

But I've had this discussion in person too, with assistant professors in our department. And I just don't get it. To me, a paper without the introduction and the discussion is almost literally nonsensical.

The intro, discussion, and conclusion have value to me because I view them not as opinion, but as argument. The introduction should set up WHY the problem is interesting to the field, and why the approach chosen is relevant. This isn't just setup. It tells me a number of useful things. For one, whether the authors know what they are doing with regard to the broader intellectual climate they are working in. But also, their thoughts on why the problem is important may not be my own; their thinking, as detailed in the introduction, may modify, interrogate, or change my own. And their thoughts about why the work is worth doing will guide 1) how they did it, and 2) what they intended to get out of it (which is important, because that setup will color how they present BOTH the methods and the results).

What is written in a paper is never just a simple narrative of the work done and the results obtained. That is a stylistic conceit. So knowing the setup is essential for critically assessing the "objective" parts (which are not so objective).

But it is the dismissal of the discussion that makes me saddest. The suggestion that the discussion can be discarded implies that what the authors think of their results is irrelevant. There may be fields (particle physics perhaps) where the results are entirely unambiguous. I have yet to encounter a biological problem in which that is the case. Moreover, the nonlinear, hierarchical interaction of biological systems (from molecules to cells to organs to organisms to behavior to ecology to evolution) means that, from an integrated biology perspective, I want to know about the potential implications of the results for connected elements of the biological hierarchy of organization. Again, a well-crafted discussion is an argument, and a source of ways forward, not the spewing of opinion.

But perhaps what makes me saddest about the sentiment expressed in that cartoon is the solipsistic vision of science it produces. A focus on methods and results discounts the intellectual work, the scholarship done by your peers. It views others' work solely in light of how it might relate to one's own, and assumes that modes of thought about scientific problems are already so fixed that nothing new will ever be found under the sun. This is not my experience of science.

In my field (evolutionary biology), one of the most important events that ever occurred was the Modern Synthesis. Over the course of ten to fifteen years, a disparate group of biologists came together to generate the modern understanding of evolution by natural selection, rooted in population genetics. The Modern Synthesis involved no groundbreaking discoveries, and happened before we even properly understood the molecular mechanisms of heredity. It was the result of years of discussion and argument, culminating not in a series of papers detailing new methods and new techniques, but in a series of books detailing a new way of thinking about biology. Crucially, it involved biologists from different fields understanding each other's work, despite being unfamiliar with each other's methods. If Mayr, Simpson, Dobzhansky, Huxley, and Stebbins had only engaged with their colleagues' work through the schema of that cartoon, the Modern Synthesis would have been impossible.

Tuesday, 24 February 2015

Telling stories about the real world

Science is very good, on the whole, at what it does: establishing predictable facts about the natural world. It's probably fair to say that modern science, in so far as there is such a thing, is better at this than pretty much any other system that humans have ever devised. As scientists, we are often tempted to take other areas of human knowledge gathering to task for not behaving enough like science in their attitude to establishing facts about the world. We berate and bemoan the lack or misuse of evidence. We pour (sometimes deserved) scorn on the apparent flimsiness of other enterprises. In two very interesting posts, drugmonkey discusses why journalism and law can be so unappealing to the scientifically trained. I don't disagree with everything he says, and I think that certainly parts of both could stand to be held to more scientific standards of evidence.

And yet, I am always a little cautious about yelling "be more scientific" at people. Scientists as a whole have a bad case of epistemological "PC gaming master race" syndrome (if I ever make a nerdier analogy, shoot me). We are convinced of the innate superiority of our means of knowing what we know. This view is hubris. On the one hand, as has been shown time and again by historians, philosophers and sociologists of science, it is remarkably difficult to pin down, at any time in history, a single definition of what constitutes the scientific method. What's more, efforts to do so have often led to awkward situations, such as Karl Popper's continual flip-flopping over whether evolution by natural selection could be considered scientific. (One of the many reasons I dislike Karl Popper.) On the other hand, there are entire areas of enquiry in which applying many of the commonly accepted facets of the "scientific method" (hypothesis testing by experiment, replication) is difficult, impossible, or wrongheaded. And no, I'm not talking about your position on omnipotent beings awkwardly obsessed with judging humans. I'm talking about the two things that are (not coincidentally) crucial in both journalism and courts of law: the reconstruction of historical events and the determination of people's internal states.

Wait, I hear you cry, are you claiming history is not a science? Well, no. What I am claiming (and the philosopher Arthur Schopenhauer got there first) is that one cannot apply an experimental framework to individual point events in the past, and furthermore that the range of applicability of hypothetico-deductive methods is much more limited. And when attempting to ascertain the truth behind a single event, in the past, never to be repeated (such as a current event, or a crime), what are we left with? Testimony, correlation, hearsay. Also known as a case. Also known as a story. Neither Baconian empiricism, nor hypothetico-deductivism, nor Popperian falsificationism will help us here. At best, they will allow us to evaluate some (though by no means all) of the evidence. In essence, the repeatable experiment is a tool for erasing the singular nature of point events (and it is much more difficult to do than is often presented).

This exact problem is one that plagues my original discipline of paleontology. Paleontology is, in many ways, history writ large. Except it is not writ, more sort of left lying around. Many of the events we would like to understand are unobservable, their consequences inferred from disparate sources of evidence observed in the here and now. And the paleontological literature is rife with people grappling with the problem of just how scientific this endeavor is. They run the gamut, from people twisting Popper's theory into an unrecognizable pretzel to accommodate paleontological historicity, to people consigning vast tracts of what is normally considered part of paleo to the dustbin of unscientific speculation. Neither approach is satisfying, and both end up running into absurdity and intellectual sterility. Paleontology is largely historical; as such, the best we can do is marshal evidence for competing narratives, and go with the most plausible. Some of that evidence will fit with more prescriptive definitions of the scientific method; most (and I count most cases of fossil discovery in this category) will not.

As for the reconstruction of the internal states of a person at a given time and place, science isn't even close to figuring that one out. Yet we, as humans, are always in the business of trying to figure out what's going on in another person's head. And, in the West at least, it is literature that has grappled most directly with this problem, through what one could describe as thought experiments, but that we usually call novels. As the philosopher Mary Midgley once wrote:

Abandoning the notion that a one-size-fits-all epistemological framework derived from the physical sciences can be applied across all areas of possible human knowledge is not the same as saying "anything goes". Rather, it is a requirement that we be more rigorous, more critical, more demanding of the evidence and narratives presented to us, given the constraints of what it is we are trying to understand and know. Indeed, the uncritical acceptance of a superficially science-y framework can be as dangerous for critical thought as any narrative-based understanding of the world (this is what Richard Feynman described as cargo cult science). We should have more than one tool in our epistemological kit. The world of things that are knowable is complex enough to need them.

PS: While researching this post, I found this delightful Schopenhauer quote we can probably all agree on:

"Newspapers are the second hand of history. This hand, however, is usually not only of inferior metal to the other hands, it also seldom works properly."

And yet, I am always a little cautious about yelling "be more scientific" at people. Scientists as a whole have a bad a case of epistemological "PC gaming master race" syndrome (if I ever make a nerdier analogy, shoot me). We are convinced of the innate superiority of our means of knowing what we know. This view is hubris. On the one hand, as has been shown time and again by historians, philosophers and sociologists of science, it is remarkably difficult to pin down, at any time in history, a single definition of what constitutes the scientific method. What's more, efforts to do so have often lead to awkward situations, such as Karl Popper's continuous flip flopping over whether evolution by natural selection could be considered scientific. (One of the many reasons I dislike Karl Popper). On the other, there are entire areas of enquiry in which applying the many commonly accepted facets of the "scientific method" (hypothesis testing by experiment, replication) is difficult, impossible, or wrong headed. And no, I'm not talking about your position on omnipotent beings awkwardly obsessed with judging humans. I'm talking about the two things that are (not coincidentally) crucial in both journalism and courts of law: the reconstruction of historical events and the determination of people's internal states.

Wait, I hear you cry, are you claiming history is not a science? Well, no. What I am claiming (and the philosopher Arthur Schopenhauer got there first) is that one cannot apply an experimental framework to individual point events in the past, and furthermore that the range of applicability of hypothetico-deductive methods is much more limited than is often assumed. And when attempting to ascertain the truth behind a single event in the past, never to be repeated (such as a current event, or a crime), what are we left with? Testimony, correlation, hearsay. Also known as a case. Also known as a story. Neither Baconian empiricism, nor hypothetico-deductivism, nor Popperian falsificationism will help us here. At best, they will allow us to evaluate some (though by no means all) of the evidence. In essence, the repeatable experiment is a tool for erasing the singular nature of point events (and doing so is much more difficult than is often presented).

This exact problem plagues my original discipline of paleontology. Paleontology is, in many ways, history writ large. Except it is not writ, more sort of left lying around. Many of the events we would like to understand are unobservable, their consequences inferred from disparate sources of evidence observed in the here and now. The paleontological literature is rife with people grappling with the problem of just how scientific this endeavor is, and they run the gamut, from people twisting Popper's theory into an unrecognizable pretzel to accommodate paleontological historicity, to people consigning vast tracts of what is normally considered part of paleo to the dustbin of unscientific speculation. Neither approach is satisfying, and both end up running into absurdity and intellectual sterility. Paleontology is largely historical; as such, the best we can do is marshal evidence for competing narratives and go with the most plausible. Some of that evidence will fit more prescriptive definitions of the scientific method; most (and I count most cases of fossil discovery in this category) will not.

As for the reconstruction of the internal states of a person at a given time and place, science isn't even close to figuring that one out. Yet we, as humans, are always in the business of trying to figure out what's going on in another person's head. And, in the West at least, it is literature that has grappled most directly with this problem, through what one could describe as thought experiments, but that we usually call novels. As the philosopher Mary Midgley once wrote:

"That is why literature is such an important part of our lives - why the notion that it is less important than science is so mistaken, Shakespeare and Tolstoy help us to understand the self-destructive psychology of despotism. Flaubert and Racine illuminate the self-destructive side of love. What we need to grasp in such cases is not the simple fact that people are acting against their interests. We know that; it stands out a mile. We need to understand, beyond this, what kind of gratification they are getting in acting this way."

Ironically, two of the authors who most thoroughly embraced this task of literature, Émile Zola and Marcel Proust, both believed that their novels were eminently empirical and, yes, scientific.

Abandoning the notion that a one-size-fits-all epistemological framework derived from the physical sciences can be applied across all areas of possible human knowledge is not the same as saying "anything goes". Rather, it is a requirement that we be more rigorous, more critical, more demanding of the evidence and narratives presented to us, given the constraints of what it is we are trying to understand and know. Indeed, the uncritical acceptance of a superficially science-y framework can be as dangerous for critical thought as any narrative-based understanding of the world (this is what Richard Feynman described as cargo cult science). We should have more than one tool in our epistemological kit. The world of things that are knowable is complex enough to need them.

PS: While researching this post, I found this delightful Schopenhauer quote we can probably all agree on:

"Newspapers are the second hand of history. This hand, however, is usually not only of inferior metal to the other hands, it also seldom works properly."

Labels: philosophy of science, probably wrong, random musings, science
